A lakehouse uses low-cost, directly accessible cloud storage while offering DBMS features such as ACID transactions, versioning, indexing, caching and query optimisation. Sounds good, of course.

Databricks' Delta Lake (which is open source, although Databricks has some internal extensions) is one possible lakehouse implementation. It uses S3 (or some other cloud storage) as a cheap storage layer, Parquet as the open storage format, and a transactional metadata layer on top. If you are curious, I summarised the Delta Lake paper here. Similar projects such as Apache Iceberg and Apache Hudi can also be considered lakehouse implementations; the ideas behind them are similar to those of Delta Lake.

All these systems are based on adding a metadata layer on top of cloud storage. This layer keeps track of transactions (a good step towards ACID features) and enables time travel, data quality enforcement and auditing. A metadata layer should also have no significant performance impact. Note that in general it allows zero-copy ingestion: if the data you already have in the cloud is in the same format the lakehouse uses, adding it is just adding a line to the metadata saying “the data is these files”. Metadata is not enough on its own for good performance, although it can help with some things, like the “many small files” issue. To get high performance, you need to treat the data lake as a database engine would, for example by keeping hot data on SSDs (solid-state drives).
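
The metadata-layer idea can be made a bit more concrete with a toy sketch. The code below is not Delta Lake's actual log format or API; `ToyTableLog`, its methods and the `s3://bucket/...` paths are all hypothetical names chosen for illustration. It only shows why an append-only log of "these files were added, these were removed" gives you zero-copy ingestion (a commit only records a path) and time travel (replay the log up to an older version).

```python
from dataclasses import dataclass, field


@dataclass
class ToyTableLog:
    """Toy metadata layer: an append-only list of commits over data files."""

    commits: list = field(default_factory=list)

    def commit(self, add=(), remove=()):
        """Record one change to the table and return its version number."""
        self.commits.append({"add": list(add), "remove": list(remove)})
        return len(self.commits) - 1

    def files_at(self, version=None):
        """Replay the log up to `version` (latest if None) to list live files."""
        if version is None:
            version = len(self.commits) - 1
        live = []
        for entry in self.commits[: version + 1]:
            live = [path for path in live if path not in entry["remove"]]
            live.extend(entry["add"])
        return live


# Hypothetical paths: the files already sit in cloud storage, so "ingesting"
# them is only a metadata write (zero copy).
part0 = "s3://bucket/events/part-000.parquet"
part1 = "s3://bucket/events/part-001.parquet"
compacted = "s3://bucket/events/compacted-000.parquet"

log = ToyTableLog()
v0 = log.commit(add=[part0])
v1 = log.commit(add=[part1])
# Compaction swaps many small files for one larger file in a single commit.
v2 = log.commit(add=[compacted], remove=[part0, part1])

latest_files = log.files_at()     # only the compacted file
files_before = log.files_at(v1)   # time travel: both original parts
```

A real implementation also has to make each commit atomic on top of object storage and handle concurrent writers, which is where most of the difficulty lies; the sketch leaves that out entirely.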


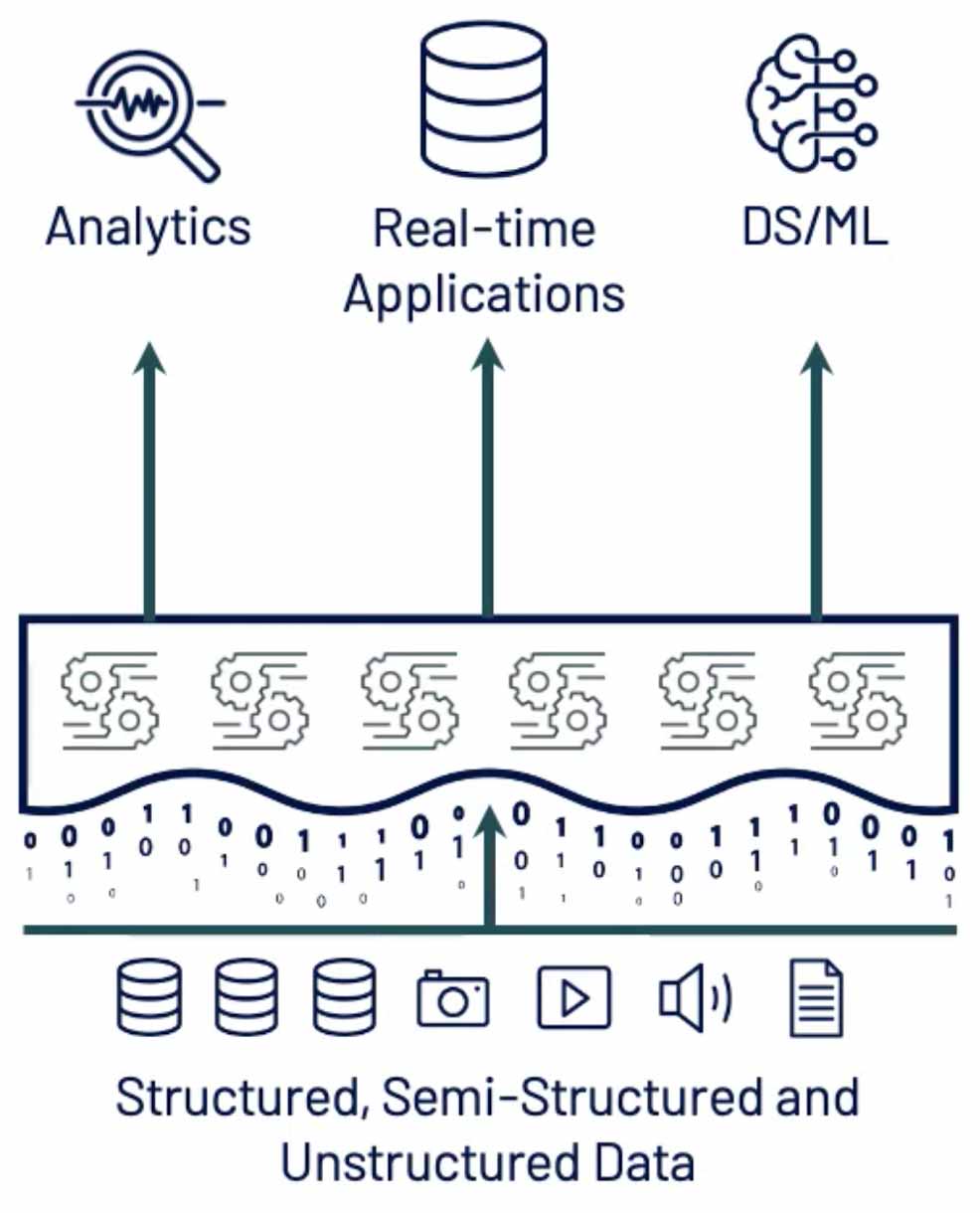

Some trends are already converging on similar solutions. Data warehouses have been adding support for external tables, so that they can access data stored in a data lake as Parquet or ORC. There has also been a surge of investment in SQL engines that run against data lakes, like SparkSQL, Presto (whose PrestoSQL fork is now known as Trino), Apache Hive and AWS Athena. One issue with them: these engines lack full ACID (Atomicity, Consistency, Isolation, Durability) guarantees.

A lakehouse combines these ideas.

Keeping a data lake and a data warehouse in sync means that you have to keep your ETL system reliable and prevent the warehouse from becoming stale. Support for machine learning is also limited: warehouses were built for BI, lakes for bulk access. And the final straw is the total cost of ownership: you pay for storage twice, once for the data lake and again for the warehouse.

An obvious question follows: what if we could use the lake as if it were a data warehouse?

Schema on write means that data is saved according to a specified schema. The basic example is inserting data into a table: the data needs to conform to the table schema. This is in contrast with schema on read, where you just save the data and specify what you want to load from it when reading. A data lake is a high-volume storage layer that uses low-cost storage and a file API, with files stored in an open format. The original and quintessential example is HDFS (Hadoop Distributed File System). Data would be dumped in the lake and then copied into a data warehouse via ETL (extract-transform-load) or ELT (just swap the letters).

After some time, cloud-based object stores (Amazon S3, Azure Blob Storage, Google Cloud Storage) replaced HDFS as the storage layer of the data lake. This change increased durability, reduced cost and offered archival tiers, like S3 Glacier. The data warehouse layer likewise moved to cloud offerings like AWS Redshift or Snowflake. The data lake plus data warehouse architecture became dominant.

The problem with this organisational layout, and a persistent headache most data engineers share, is that you have two systems that need to be kept in sync.
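
To make the schema-on-write versus schema-on-read distinction concrete, here is a minimal sketch in plain Python. The `SCHEMA` dictionary, the function names and the sample records are all made up for illustration; a real warehouse or query engine does this with far richer type systems.

```python
import json

# Hypothetical table schema: column name -> expected Python type.
SCHEMA = {"user_id": int, "amount": float}


def write_with_schema(table, record):
    """Schema on write: validate the record before it is stored."""
    if set(record) != set(SCHEMA):
        raise ValueError(f"columns do not match schema: {sorted(record)}")
    for column, expected_type in SCHEMA.items():
        if not isinstance(record[column], expected_type):
            raise TypeError(f"{column} must be {expected_type.__name__}")
    table.append(record)


def read_with_schema(raw_lines, columns):
    """Schema on read: anything was stored; pick the columns when reading."""
    for line in raw_lines:
        record = json.loads(line)
        # Missing columns come back as None instead of failing the write.
        yield {column: record.get(column) for column in columns}


# Schema on write: the second record has the wrong type and is rejected.
table = []
write_with_schema(table, {"user_id": 1, "amount": 9.5})
try:
    write_with_schema(table, {"user_id": "1", "amount": 9.5})
    rejected = False
except TypeError:
    rejected = True

# Schema on read: raw JSON lines were dumped as-is, with uneven shapes.
raw_lines = [
    '{"user_id": 1, "amount": 9.5, "note": "extra field"}',
    '{"user_id": 2}',
]
projected = list(read_with_schema(raw_lines, ["user_id", "amount"]))
```

The trade-off shows up in where the failure happens: schema on write fails early at ingestion, while schema on read accepts everything and pushes the handling of missing or odd fields to whoever reads the data.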
